The 150,000-square-foot rooftop array uses CSUN modules, Solectria inverters, and PVKIT rail-less racking hardware and mini clamps from S-5! Inc. (Image: Parallel Products' 1.9 MW rooftop solar array in New Bedford, Mass. Credit: S-5!, Inc.) Parallel Products, a consumer...

News & Announcements

Scientists develop stable sodium battery technology

Replacing lithium and cobalt in lithium-ion batteries would result in a more environmentally and socially conscious technology, scientists say. Toward that end, University of Texas at Austin researchers, funded in part by the U.S. National Science Foundation, have...

The past, the present and the future of Women in BIM

Rebecca De Cicco, global chair and founder of Women in BIM, discusses the group's evolution over the past decade and what lies ahead as it helps women across the globe become part of a digital-first industry. Digital impacts everything we do, from the homes we...

Bentley Systems launches “Phase 2” of the infrastructure metaverse

Bentley Systems, the infrastructure engineering software giant, launched Phase 2 of the infrastructure metaverse at its Year in Infrastructure conference in London. This new phase includes many enhancements intended to bridge gaps between data processes in information...

The 10 most future-ready cities in North America

Cities must invest more in digital and physical infrastructure to become more inclusive, resilient, sustainable and safe, according to a recently released report by ThoughtLab, a marketing and economic research company, and Hatch, a consulting firm.

SMART Competition – Session 1

The SMART Competition is opening Session 1 for team registration. Although the competition has no official start or end dates, teams may choose the session in which they compete. Competition Session 1: December 1 – April 1...

Bentley Systems and Genesys International partner to provide 3D mapping for Indian cities

New Delhi: Bentley Systems, Incorporated, an infrastructure engineering software company, and Genesys International, a pioneer in advanced mapping and geospatial content services, announced that Genesys’s 3D City Digital Twin Solution for Urban India – the first city...

Builders face security and privacy risks as BIM takes off

As state and federal agencies tighten cybersecurity regulations on projects, here's how contractors and subs can comply. While leveraging BIM brings many benefits, it also comes with unique challenges for contractors trying to land civil...

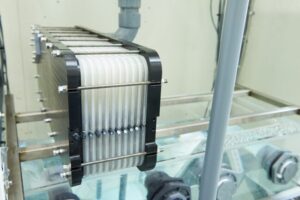

Zinc8 to manufacture its first zinc-air batteries in the U.S.

Canadian battery developer Zinc8 Energy Solutions announced its plans to begin battery production in the United States. Zinc8 Energy Solutions Inc. announced that the company's inaugural commercial production facility will be based in Ulster County in New...

Comparing performance of transparent solar windows to traditional windows

A study conducted under a Wells Fargo Foundation and NREL initiative showed that PV-coated windows can appreciably lower the solar heat gain coefficient. NEXT Energy Technology executives...

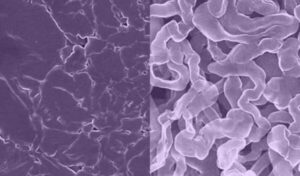

High-performance aqueous calcium-ion battery

Researchers from Rensselaer Polytechnic Institute in the United States have developed a special class of materials for bulky calcium ions, providing pathways for their facile insertion into battery electrodes. Against a backdrop of soaring prices and...

New photovoltaic tiles from Estonia

Estonian startup Solarstone has developed two solar tiles with an efficiency of up to 19.5% and an operating temperature coefficient of -0.41% per °C. It recently secured €10 million in funding to expand sales across Europe. Estonian startup Solarstone...